Claude 3, Anthropic's newest and most advanced large language model (LLM), rivals the most popular AI models on the market in both performance and capabilities, and Llama 2 is no exception. On published benchmarks, Claude 3 outperforms Llama 2 as well as other popular LLMs.

In this article, we will explore the Claude 3 and Llama 2 models and the differences between them.

Ready? Let's dive in!

TL;DR

- Claude 3 is the newest and most advanced large language model developed by Anthropic and introduced on March 4, 2024.

- Claude 3 comes in three sizes designed for different tasks: Haiku, Sonnet, and Opus.

- To access Claude 3 models, you can use Anthropic's official website or head to TextCortex, which combines it with advanced features.

- Llama 2 is a large language model developed by Meta AI that comes in three different model sizes: 7B, 13B and 70B.

- Although the Claude 3 model is superior to the Llama 2 model in terms of performance and context window, Llama 2 is free for research and commercial use.

- If you're looking for a better conversational AI experience than Claude 3 and Llama 2, ZenoChat by TextCortex is the way to go.

Claude 3 Review

Claude 3 is a family of models developed by Anthropic that, on Anthropic's reported benchmarks, surpasses other advanced large language models. With Claude 3, you can generate new text or code, hold human-like conversations, or automate repetitive, mundane tasks.

Claude 3 Model Sizes

The Claude 3 large language model comes in three sizes designed for different tasks and use cases:

- Haiku: The smallest member of the Claude 3 family, designed to automate basic tasks.

- Sonnet: The mid-sized member of the Claude 3 family, designed for mid-level tasks. It outperforms the GPT-3.5 model.

- Opus: The largest member of the Claude 3 family, designed for highly complex tasks. On Anthropic's reported benchmarks it is more effective than GPT-4.

Claude 3 Pricing

You can use the Claude 3 Sonnet model for free, but you need a Claude Pro subscription ($20 per month) to use the Claude 3 Opus model as an AI chatbot. If you want to use Claude 3 models through the API, pricing is as follows:

- Claude 3 Haiku: $0.25 per million input tokens, $1.25 per million output tokens

- Claude 3 Sonnet: $3 per million input tokens, $15 per million output tokens

- Claude 3 Opus: $15 per million input tokens, $75 per million output tokens
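
To make the per-token prices above concrete, here is a minimal sketch that estimates the API cost of a workload. The prices are copied from the list above; the function name `estimate_cost`, the dictionary keys, and the example token counts are illustrative assumptions, not part of any official SDK.

```python
# USD per million tokens, copied from the pricing list above.
PRICES_PER_MILLION = {
    "haiku": {"input": 0.25, "output": 1.25},
    "sonnet": {"input": 3.00, "output": 15.00},
    "opus": {"input": 15.00, "output": 75.00},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of a workload (hypothetical helper)."""
    price = PRICES_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


if __name__ == "__main__":
    # Example workload (an assumption): 1M input tokens, 200K output tokens.
    for name in PRICES_PER_MILLION:
        cost = estimate_cost(name, input_tokens=1_000_000, output_tokens=200_000)
        print(f"{name}: ${cost:.2f}")
```

For that example workload, the estimate comes to about $0.50 for Haiku, $6.00 for Sonnet, and $30.00 for Opus, so Opus costs roughly 60 times as much as Haiku for the same traffic.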

How do I access Claude 3?

To access the Claude 3 model, all you have to do is create an Anthropic account. Afterwards, you can start experiencing Claude 3 models by heading to the claude.ai web interface.

Another way to experience the Claude 3 models is ZenoChat by TextCortex. With ZenoChat, you can experiment with all Claude 3 models including Opus, Sonnet and Haiku.

What is Llama 2?

Llama 2 is a popular large language model developed by Meta AI. It is designed to produce helpful and harmless output in response to users' input. It is also a useful LLM for code generation tasks, and it is free for research and commercial use.

Llama 2 Model Sizes

Llama 2 comes in three parameter sizes: 7B, 13B, and 70B. The chat-tuned versions of these models were refined with supervised fine-tuning and reinforcement learning from human feedback (RLHF), which relies on reward models. Although these models can compete with the GPT-3.5 model, Claude 3 models have higher performance.

What Can Llama 2 Generate?

With the Llama 2 model, you can generate text-based outputs such as stories, poems, articles, blog posts, essays, and emails. Moreover, you can use it to rewrite your existing texts or translate them into another language.

Llama 2 can also generate code. You can use it to produce output in many programming languages or to help with prompt engineering tasks.

How to Access Llama 2?

You can access Llama 2 in all three sizes via Meta AI's official website. You can also download the models and use them for research or commercial purposes.

Anthropic’s Claude 3 vs. Meta AI’s Llama 2 Comparison

We now have basic information about Anthropic's Claude 3 model and Meta AI's Llama 2 model. Next, we can compare these two large language models from different aspects and discover which one is ideal for you!

Performance Difference

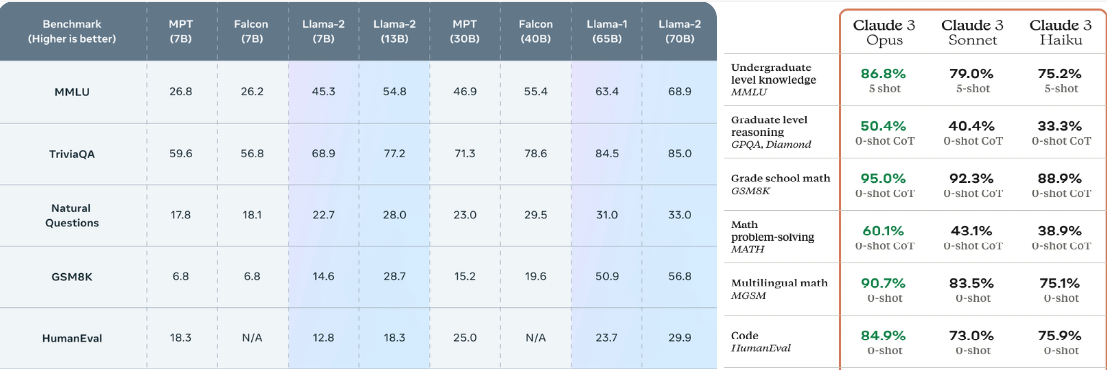

Every size of the Claude 3 model scores higher on benchmarks than the Llama 2 model. For example, Llama 2 70B, the most advanced Llama 2 model, scores 68.9% on the MMLU benchmark, while Haiku, the smallest Claude 3 model, scores 75.2% on the same benchmark. A similar gap appears in other benchmarks such as GSM8K and HumanEval. In short, Claude 3 outperforms Llama 2.

Context Window

Llama 2 models offer a context window of 4,096 tokens, while Claude 3 models offer a context window of 200K tokens. Anthropic already offered a larger context window than Llama 2 with its Claude 2 model, and Claude 3 widens the gap considerably. In other words, if your tasks require working with large amounts of data, Claude 3 is the better choice.
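
As a rough illustration of what this difference means in practice, the sketch below checks whether a document is likely to fit in each context window. The window sizes come from the paragraph above. The four-characters-per-token ratio is only a common rule of thumb for English text, not a real tokenizer, and the names `estimate_tokens` and `fits_in_context` are hypothetical.

```python
# Context window sizes in tokens, taken from the comparison above.
CONTEXT_WINDOWS = {"llama-2": 4_096, "claude-3": 200_000}

# Assumption: roughly 4 characters per token for English text.
# Real tokenizers differ by model and by language.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate the token count of text using ceiling division."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, model: str, reserved_for_output: int = 0) -> bool:
    """Return True if the text plus reserved output tokens fits the window."""
    needed = estimate_tokens(text) + reserved_for_output
    return needed <= CONTEXT_WINDOWS[model]


if __name__ == "__main__":
    document = "a" * 40_000  # stands in for a document of about 10,000 tokens
    for model in CONTEXT_WINDOWS:
        print(model, fits_in_context(document, model, reserved_for_output=500))
```

Under these assumptions, a 40,000-character document is too large for Llama 2 but fits comfortably in Claude 3. For anything beyond a quick estimate, count tokens with the tokenizer that belongs to the model you are using.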

Pricing

While you have to pay a fee to use the Claude 3 model through the API, you can use and integrate the Llama 2 model for free. On the other hand, you can chat for free via claude.ai with the Claude 3 Sonnet model, which offers higher performance than all sizes of the Llama 2 model. However, if you want to chat with the Claude 3 Opus model, you need a Claude Pro subscription at $20 per month.

A Better Alternative for Both: ZenoChat

If you are looking for a conversational AI assistant that offers more features and advanced capabilities than both Claude 3 and Llama 2, ZenoChat is designed for you. ZenoChat is a conversational AI designed to help users complete their daily and professional tasks, reducing their workload and boosting productivity. ZenoChat is available as a web application and browser extension. The ZenoChat browser extension is integrated with 30,000+ websites and apps, so it can support you anywhere and anytime.

ZenoChat offers a fully customizable AI experience through our “Individual Personas” and “Knowledge Bases” features. Our “Individual Personas” feature allows you to adjust ZenoChat's output style, tone of voice, personality, attitude, and the dominant emotion in its responses. In other words, with this feature, you can shape ZenoChat to suit your needs.

Our “Knowledge Bases” feature allows you to upload or connect the datasets that ZenoChat will use when producing output. Using this feature, you can train ZenoChat for your specific tasks, chat with your PDFs, or summarize large amounts of data with a single prompt.
