Meta AI released Llama 3, the most advanced version of its large language model at the time, on April 18, 2024. Llama 3 performs strongly against its competitors and was trained on a dataset selected with special filtering methods. It comes in two sizes, 8B and 70B, each suited to different use cases. If you are curious about Llama 3 and wondering how you can access it, we've got you covered!

In this article, we will examine what the Llama 3 model is and how you can access it.

Ready? Let's dive in!

TL;DR

- Llama 3 is a large language model developed by Meta AI and announced on April 18, 2024.

- Llama 3 comes in two sizes, 8B and 70B, each suited to different use cases.

- Llama 3 uses technologies such as natural language processing (NLP), machine learning, and deep learning to generate output.

- Since the Llama 3 model is open-source, it is free to use.

- The Llama 3 model has higher performance in most benchmarks than its rival models such as GPT-3.5 and Claude 3 Sonnet.

- The Llama 3 model was trained using high-quality data from 30+ languages.

- To access the Llama 3 model, you must log in to your Meta AI account from a country where it is available.

What is Llama 3?

Llama 3 is a Large Language Model (LLM) developed by Meta AI with higher performance than its predecessor, Llama 2. Meta AI released it in both pretrained and instruction-fine-tuned versions. Compared to Llama 2, the Llama 3 model has improved reasoning, language understanding, instruction following, and coding skills. Meta AI aims to kickstart the next wave of innovation in artificial intelligence with the Llama 3 model.

Llama 3 Model Sizes

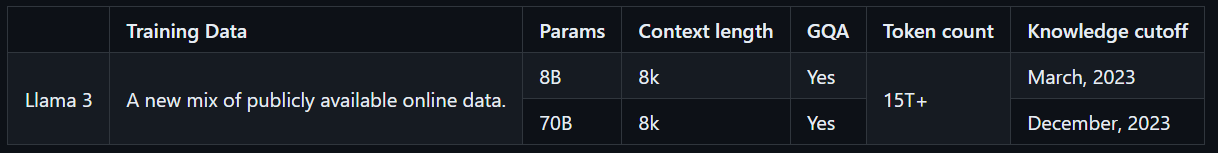

The Llama 3 model is available in two sizes, each suited to different use cases. The 8B model is ideal for completing simple tasks quickly and accurately, while the 70B model is designed to handle larger, more complex tasks with high-quality results. Both models were trained on over 15T tokens, a dataset seven times larger than the one used to train the Llama 2 model. Additionally, the Llama 3 models have multilingual capabilities, thanks to the high-quality non-English data included in training.
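To get a rough feel for what the two sizes mean in practice, here is a minimal sketch that estimates how much memory the weights alone would need. It assumes the nominal parameter counts implied by the model names (8 billion and 70 billion) and common bytes-per-parameter values; the `weight_memory_gb` helper is hypothetical, and real deployments also need memory for activations and the KV cache.

```python
# Nominal parameter counts taken from the model names (assumption: exactly 8e9 and 70e9).
MODEL_PARAMS = {"llama-3-8b": 8e9, "llama-3-70b": 70e9}

# Bytes needed to store one parameter in common numeric formats.
BYTES_PER_PARAM = {"fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(model: str, dtype: str = "fp16") -> float:
    """Approximate memory for the weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return MODEL_PARAMS[model] * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for model in MODEL_PARAMS:
        for dtype in BYTES_PER_PARAM:
            print(f"{model} @ {dtype}: ~{weight_memory_gb(model, dtype):.0f} GB")
```

Under these assumptions, the 8B model fits on a single consumer GPU once quantized, while the 70B model generally needs multiple GPUs or aggressive quantization.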

How Does Llama 3 Work?

The Llama 3 model generates output using AI technologies such as Natural Language Processing (NLP), deep learning, and machine learning. It analyzes the user's input using its trained parameters, infers the user's intent, and generates the output the user needs.

The Llama 3 model was trained on data that passed through special filtering systems to ensure safe and appropriate output. These systems include NSFW filters, heuristic filters, semantic deduplication approaches, and text classifiers that predict data quality. Furthermore, because Llama 2, the predecessor of Llama 3, proved good at identifying high-quality data, Meta AI used it to help select the data used to train Llama 3.
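The filtering stages above can be illustrated with a toy pipeline. This is only a sketch of the general idea, not Meta AI's implementation: the blocklist terms, the thresholds, and every function name are hypothetical; exact-match hashing stands in for semantic deduplication; and a simple unique-word ratio stands in for a learned quality classifier.

```python
import hashlib
import re

# Hypothetical placeholder terms; a real NSFW filter is far more sophisticated.
BLOCKLIST = {"explicit", "nsfw"}


def passes_heuristics(text: str) -> bool:
    """Heuristic filter: at least 5 words and at least 60% alphabetic characters."""
    if len(text.split()) < 5:
        return False
    letters = sum(ch.isalpha() for ch in text)
    return letters / len(text) >= 0.6


def passes_nsfw_filter(text: str) -> bool:
    """Reject documents that contain any blocklisted word."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return not (words & BLOCKLIST)


def fingerprint(text: str) -> str:
    """Hash of the lowercased, punctuation-free text (stand-in for semantic dedup)."""
    normalized = " ".join(re.findall(r"[a-z0-9]+", text.lower()))
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def quality_score(text: str) -> float:
    """Stand-in for a learned classifier: share of unique words in the text."""
    words = text.lower().split()
    return len(set(words)) / len(words) if words else 0.0


def filter_corpus(docs, min_quality=0.5):
    """Apply the four stages in order and return the surviving documents."""
    seen = set()
    kept = []
    for doc in docs:
        if not passes_heuristics(doc) or not passes_nsfw_filter(doc):
            continue
        fp = fingerprint(doc)
        if fp in seen or quality_score(doc) < min_quality:
            continue
        seen.add(fp)
        kept.append(doc)
    return kept
```

Cheap checks run first so that the more expensive stages only see documents that have already survived the earlier ones, which is a common ordering for this kind of pipeline.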

Is Llama 3 Free to Use?

Meta AI has always advertised its Llama model series as open-source and free to use, and Llama 3 is no exception. Since the currently released Llama 3 8B and 70B models are open-source, anyone can try them. However, it is not yet clear whether Llama 3 400B, which is still in training and is the most advanced member of the Llama 3 series, will be free to use.

Llama 3 Features

Llama 3 was released as two models that outperform their competitors, largely because they were trained on data selected through special filtering. The Llama 3 model also has features that set it apart from other LLMs on the market. Let's take a closer look at the features of Llama 3.

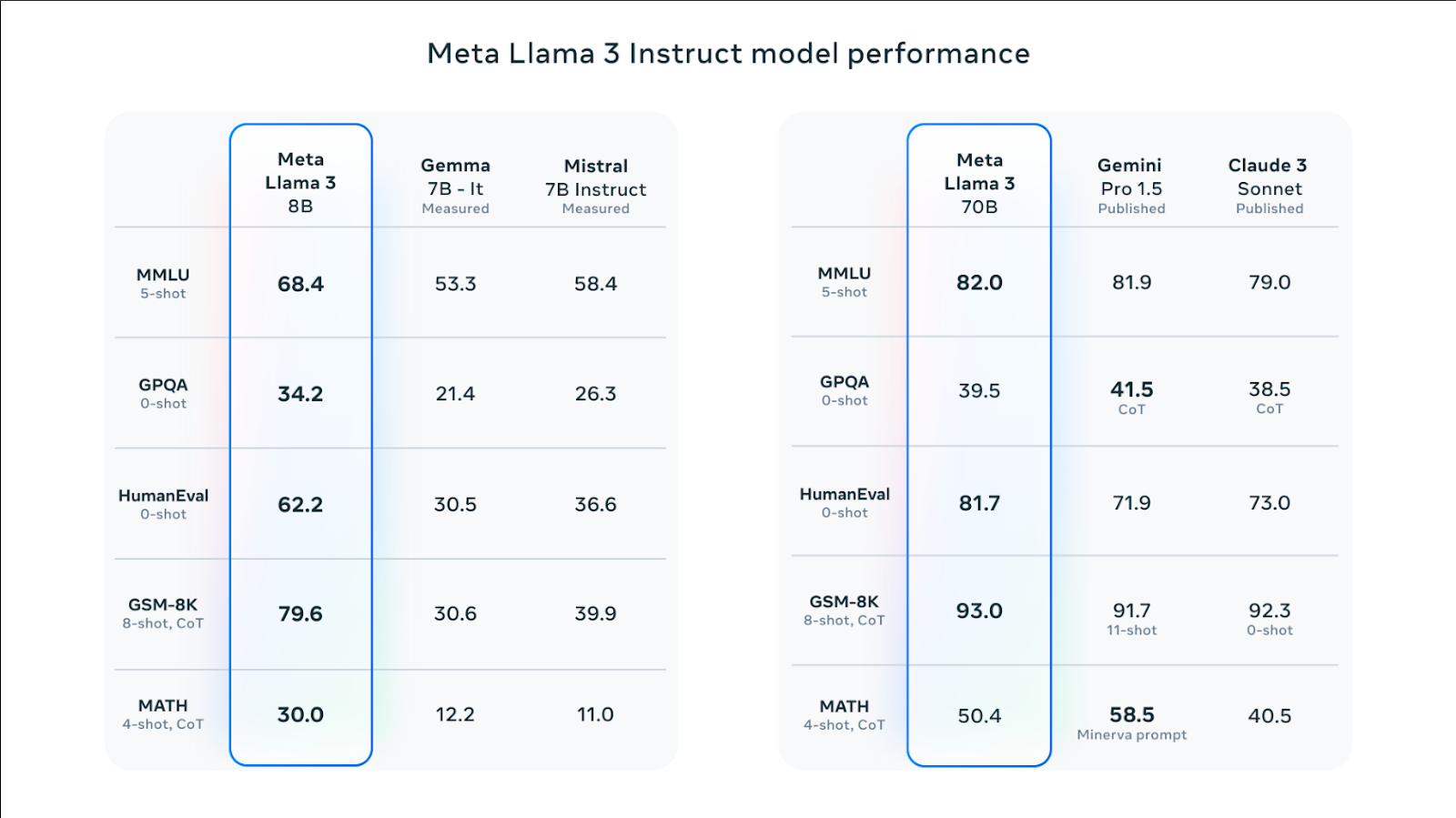

Performance and Benchmarks

Both the Llama 3 8B and Llama 3 70B models score higher than their competitors in benchmarks such as HumanEval, MMLU, and DROP. For example, the Llama 3 70B model scores slightly higher on the MMLU benchmark than the comparable Gemini Pro 1.5 and Claude 3 Sonnet models. The Llama 3 8B model has higher overall performance than its competitors, the Gemma 7B and Mistral 7B models.

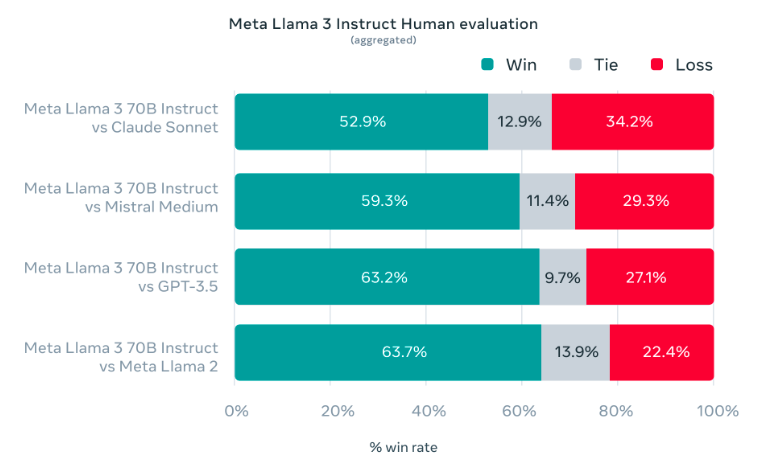

While developing the Llama 3 model, Meta AI aimed for high performance in real-life use cases rather than only in laboratory tests. For this reason, the Meta AI team developed a new, high-quality human evaluation set. This evaluation set consists of 1,800 prompts covering 12 key use cases. The prompts include tasks such as asking for advice, coding, brainstorming, creative writing, Q&A, reasoning, rewriting, and summarization. In these tests, Meta AI's Llama 3 model outperformed its rivals Claude Sonnet, GPT-3.5, and Mistral Medium.

Prompt Understanding

Since the Llama 3 model is trained using supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF), it follows prompts more reliably than its predecessor. During training, Meta AI prioritized generating helpful and safe outputs.

Over 5% of the Llama 3 model's pretraining data consists of high-quality non-English data covering 30+ languages. For this reason, the Llama 3 model can analyze and understand prompts that users write in languages other than English.

Model Architecture

The Llama 3 model uses a decoder-only transformer architecture. According to Meta AI's article, Llama 3 uses a tokenizer with a vocabulary of 128K tokens that encodes language much more efficiently, which leads to substantially improved model performance. Both the 8B and 70B sizes of the Llama 3 model were trained on sequences of 8,192 tokens.
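Because the models were trained on sequences of 8,192 tokens, longer inputs have to be shortened or split before the model can process them. The sketch below shows one simple way to do that, assuming the text has already been converted to a list of token IDs by some tokenizer; `split_into_windows` is a hypothetical helper, not part of any Llama 3 tooling.

```python
from typing import Iterator, List, Sequence

# Training sequence length reported for both Llama 3 sizes.
CONTEXT_LENGTH = 8192


def split_into_windows(
    token_ids: Sequence[int], window: int = CONTEXT_LENGTH
) -> Iterator[List[int]]:
    """Yield consecutive, non-overlapping chunks of at most `window` token IDs."""
    if window <= 0:
        raise ValueError("window must be positive")
    for start in range(0, len(token_ids), window):
        yield list(token_ids[start:start + window])


if __name__ == "__main__":
    fake_tokens = list(range(20_000))  # stand-in for real tokenizer output
    for i, chunk in enumerate(split_into_windows(fake_tokens)):
        print(f"window {i}: {len(chunk)} tokens")
```

Non-overlapping windows are the simplest choice; an application that needs context to carry across chunk boundaries might prefer overlapping windows instead.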

Meta AI used a combination of data parallelization, model parallelization, and pipeline parallelization to train the Llama 3 model. This combination spreads the workload across many GPUs and increases training throughput. According to Meta AI, its most efficient implementation achieved a compute utilization of over 400 TFLOPS per GPU when training on 16K GPUs simultaneously. Meta AI also built error detection, handling, and maintenance systems to maximize GPU uptime during training.
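To put the 400 TFLOPS figure in perspective, here is a back-of-the-envelope sketch of training compute. It relies on the widely used rule of thumb that training a dense transformer costs roughly 6 × parameters × tokens floating-point operations; that rule is an assumption of this example, not a number from Meta AI, and real training runs rarely sustain peak throughput, so actual GPU time will be higher.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training cost: about 6 FLOPs per parameter per token."""
    return 6.0 * n_params * n_tokens


def gpu_hours(total_flops: float, flops_per_gpu_per_s: float = 400e12) -> float:
    """GPU-hours needed if every GPU sustained the given throughput (optimistic)."""
    return total_flops / flops_per_gpu_per_s / 3600.0


if __name__ == "__main__":
    tokens = 15e12  # "over 15T tokens", taken as exactly 15T here
    for name, params in [("8B", 8e9), ("70B", 70e9)]:
        flops = training_flops(params, tokens)
        print(f"Llama 3 {name}: ~{flops:.1e} FLOPs, ~{gpu_hours(flops):,.0f} GPU-hours")
```

The estimate is a lower bound under ideal conditions and is useful mainly for comparing the relative cost of the two model sizes.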

How to Access Llama 3?

The Llama 3 model is an effective solution for everyday tasks. Its major advantages are that it outperforms the Claude Sonnet and GPT-3.5 models and that it is open-source. Let's take a closer look at how to access Llama 3.

Experience Llama 3 on Meta AI

You can access the Llama 3 model via Meta AI. All you have to do is head to Meta AI's official website, create an account, and request access. However, outside the US, the Llama 3 model is only available in Australia, Canada, Ghana, Jamaica, Malawi, New Zealand, Nigeria, Pakistan, Singapore, South Africa, Uganda, Zambia, and Zimbabwe. If you are not in one of these countries, you will see the message "Meta AI isn't available yet in your country".
