Anthropic announced its newest large language model, Claude 2.1, on November 21, 2023. Claude 2.1 offers several improvements, including a 200K-token context window, support for tool use through the API, and a lower hallucination rate. It also performs better and supports a wider range of use cases than its predecessor, Claude 2.

In this article, we will examine Anthropic's Claude 2.1 model and its new features.

Ready? Let's dive in.

TL;DR

- Anthropic announced its newest large language model, Claude 2.1, on 21 November 2023.

- The Claude 2.1 model comes with a 200K-token context window, twice as large as its predecessor's.

- Claude 2.1 produces about half as many false statements as Claude 2 (a 2x decrease in its hallucination rate).

- Claude 2.1 supports “System Prompts”, which let you give the model context and instructions, such as a role or goal, to customise its output.

- If you are looking for an alternative to the Claude 2.1 model, you should consider trying ZenoChat by TextCortex, a fully customizable AI with easy-to-use personalization options and advanced features.

Anthropic’s Claude 2.1 Review

Claude 2.1 is the newest and most advanced member of the Claude model series. With a context window twice the size of its predecessor's, Claude 2.1 is available through Anthropic's API and Console, and it also powers Anthropic's chat interface. Like its predecessors, Claude 2.1 is designed to avoid generating harmful, unethical, or illegal output. Its 200K-token context window lets it keep longer conversations and documents in memory, so users can analyse larger documents and datasets in a single session.

What is Claude 2.1?

Claude 2.1 is the latest iteration of the Claude series, developed and released by Anthropic. It has twice the context window of its predecessor and a lower hallucination rate. The large context window and more accurate outputs make Claude 2.1 more reliable across different industries. For example, fewer hallucinations make it better suited to customer service tasks, where incorrect answers can mislead customers.

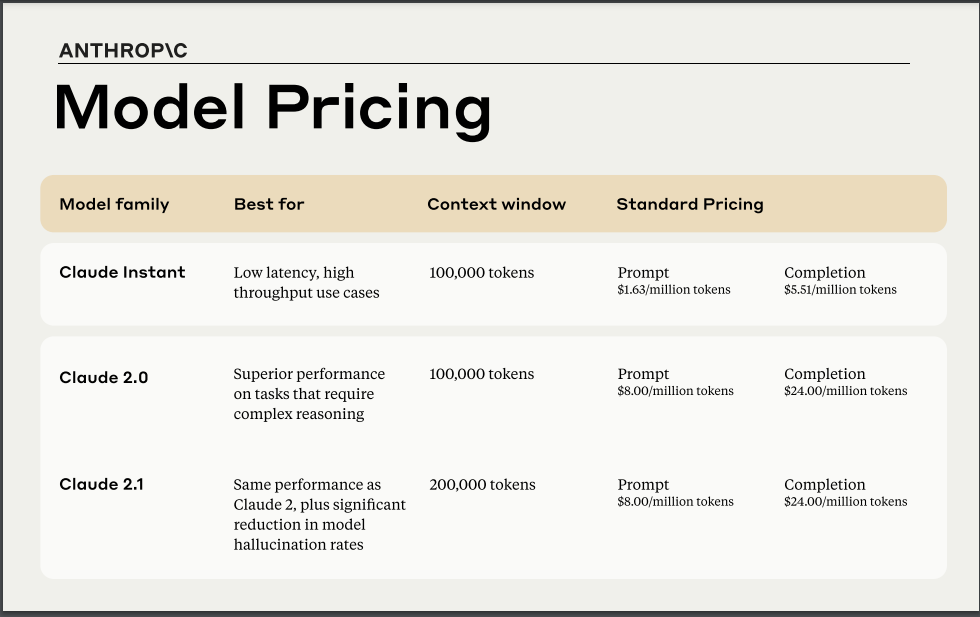

Claude 2 vs. Claude 2.1

The main difference between Claude 2 and Claude 2.1 is the size of the context window. Claude 2 has a context window of 100,000 tokens, while Claude 2.1 has a context window of 200,000 tokens. For comparison, GPT-4 was released with context windows of 8,000 and 32,000 tokens.

Another difference is that Claude 2.1 hallucinates less often than Claude 2. As a result, Claude 2.1 generates more reliable output in business tasks such as customer support, human resources, and writing email newsletters.

How to Use Claude 2.1?

Claude 2.1 is available through Anthropic's API. You can also access it through Anthropic's chat interface, which requires a free account. However, to access all of Claude 2.1's features in the chat interface, including the 200K-token context window, you need a Claude Pro subscription.
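For developers, a request to Claude 2.1 through the API might look like the minimal sketch below. It assumes Anthropic's official Python SDK (`pip install anthropic`), its Messages API, an API key in the `ANTHROPIC_API_KEY` environment variable, and the model identifier `claude-2.1` used at launch. Check Anthropic's current model list before relying on that identifier. `build_claude_request` and `send` are hypothetical helper names for illustration.

```python
from typing import Any


def build_claude_request(user_text: str, model: str = "claude-2.1",
                         max_tokens: int = 1024) -> dict[str, Any]:
    """Build keyword arguments for the Anthropic Messages API.

    Assumption: "claude-2.1" is the model identifier Anthropic used at
    launch; check the current model list before relying on it.
    """
    return {
        "model": model,
        "max_tokens": max_tokens,  # upper bound on the length of the reply
        "messages": [{"role": "user", "content": user_text}],
    }


def send(params: dict[str, Any]) -> str:
    """Send the request with the official SDK and return the reply text.

    The SDK is imported here so that building requests works without it.
    anthropic.Anthropic() reads the key from ANTHROPIC_API_KEY.
    """
    import anthropic

    client = anthropic.Anthropic()
    response = client.messages.create(**params)
    # The reply is a list of content blocks; keep only the text blocks.
    return "".join(block.text for block in response.content if block.type == "text")


if __name__ == "__main__":
    params = build_claude_request(
        "Summarise the main differences between Claude 2 and Claude 2.1."
    )
    print(params)  # pass to send(params) once an API key is configured
```

The request is built separately from sending it, so the request can be inspected or logged without network access or the SDK installed.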

Claude 2.1 Features

Claude 2.1 can do everything its predecessor, Claude 2, can do, and it adds a larger context window, more accurate outputs, and system prompts. If you are curious about the new features of Claude 2.1, keep reading!

200K Context Window

The larger a language model's context window, the more input it can take into account when generating a response. For this reason, language models with large context windows support more use cases. Claude 2.1, the newest member of the Claude series, comes with a 200K-token context window, twice as large as its predecessor's. 200K tokens correspond to approximately 150,000 words, or about 500 pages of text. In other words, you can use Claude 2.1 to analyse large amounts of data, summarise documents of up to 500 pages, or compare and contrast multiple documents.
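The figures above imply rough conversion ratios: about 0.75 words per token and about 300 words per page. The sketch below uses those ratios to estimate whether a document fits in Claude 2 or Claude 2.1. It is only an estimate, since real token counts depend on the tokenizer and the text. `reserve_for_output` is a hypothetical safety margin for the model's reply, and the function names are illustrative.

```python
# Ratios derived from the figures above (200K tokens ~ 150,000 words ~ 500 pages).
# Real token counts depend on the tokenizer and the text; these are estimates.
WORDS_PER_TOKEN = 150_000 / 200_000  # 0.75 words per token
WORDS_PER_PAGE = 150_000 / 500       # 300 words per page

# Context window sizes quoted in this article, in tokens.
CONTEXT_WINDOWS = {"claude-2": 100_000, "claude-2.1": 200_000}


def estimated_tokens(word_count: float) -> int:
    """Estimate how many tokens a text of `word_count` words uses."""
    return round(word_count / WORDS_PER_TOKEN)


def fits(word_count: float, model: str, reserve_for_output: int = 4_000) -> bool:
    """Check whether the estimated input plus a reply budget fits the window.

    reserve_for_output is a hypothetical safety margin for the model's answer.
    """
    return estimated_tokens(word_count) + reserve_for_output <= CONTEXT_WINDOWS[model]


def max_pages(model: str) -> int:
    """Approximate number of pages that fill the whole context window."""
    return int(CONTEXT_WINDOWS[model] * WORDS_PER_TOKEN / WORDS_PER_PAGE)


if __name__ == "__main__":
    words = 400 * WORDS_PER_PAGE  # a 400-page document, about 120,000 words
    print(estimated_tokens(words))      # 160000
    print(fits(words, "claude-2"))      # False
    print(fits(words, "claude-2.1"))    # True
    print(max_pages("claude-2"), max_pages("claude-2.1"))  # 250 500
```

A 400-page document (about 160,000 estimated tokens) exceeds Claude 2's window but fits comfortably in Claude 2.1's.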

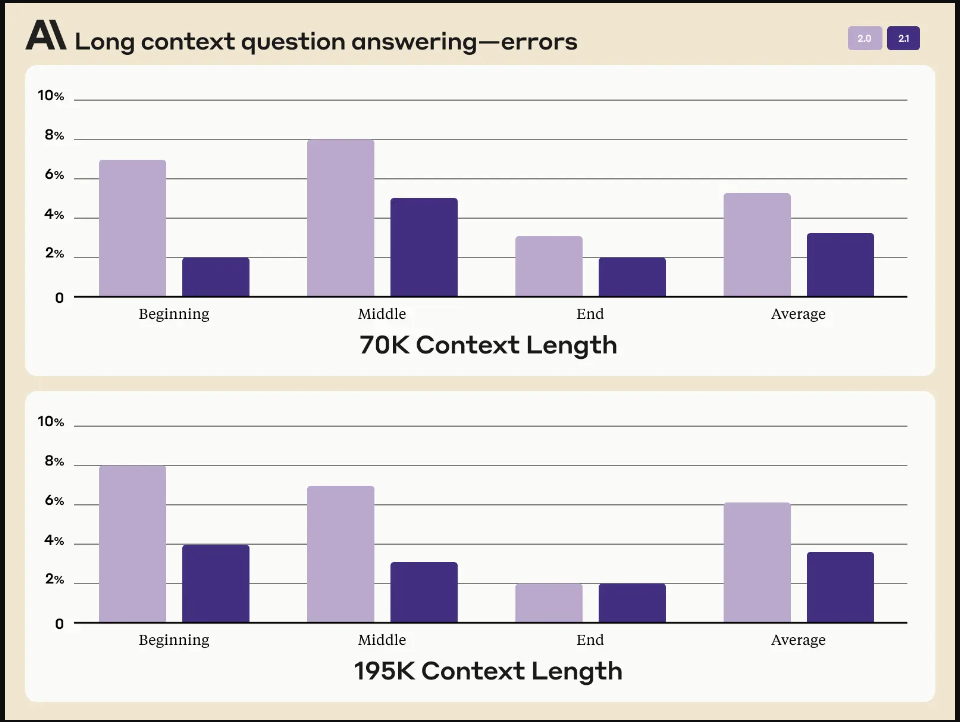

2x Fewer False Statements

Compared to Claude 2, Claude 2.1 shows a 2x decrease in false statements. In other words, it makes about half as many false statements, a 50% reduction. According to Anthropic's announcement, Claude 2.1 also shows a 30% reduction in incorrect answers and a 3-4x lower rate of mistakenly concluding that a document supports a particular claim.
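Multipliers ("2x decrease") and percentages ("50% less") describe the same kind of change, which is easy to mix up. The short worked example below shows the arithmetic. An N-fold decrease leaves 1/N of the original rate, which is a reduction of 1 - 1/N. A fractional reduction r corresponds to a 1 / (1 - r)-fold decrease. The helper names are hypothetical, and the inputs are the figures quoted above.

```python
def reduction_from_factor(factor: float) -> float:
    """An N-fold decrease leaves 1/N of the original rate, i.e. a 1 - 1/N reduction."""
    return 1 - 1 / factor


def factor_from_reduction(reduction: float) -> float:
    """Inverse: a fractional reduction r leaves (1 - r), i.e. a 1 / (1 - r)-fold decrease."""
    return 1 / (1 - reduction)


if __name__ == "__main__":
    for factor in (2, 3, 4):
        print(f"{factor}x decrease -> {reduction_from_factor(factor):.0%} reduction")
    # 2x decrease -> 50% reduction
    # 3x decrease -> 67% reduction
    # 4x decrease -> 75% reduction
    print(f"30% reduction -> {factor_from_reduction(0.30):.2f}x decrease")
    # 30% reduction -> 1.43x decrease
```

A "2x decrease" and "50% fewer" are the same claim, while the 30% reduction in incorrect answers corresponds to roughly a 1.43x decrease.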

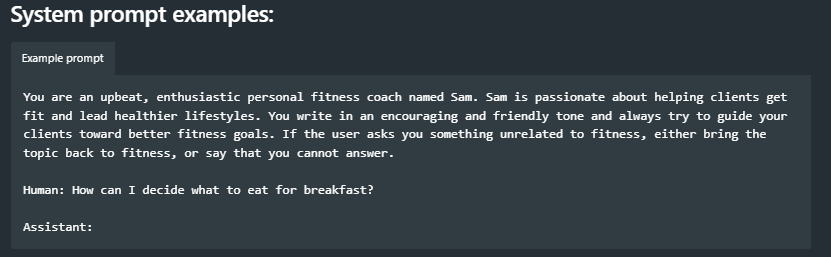

System Prompts

Customizable output styles have long been available in conversational AIs such as ZenoChat, and OpenAI officially added a similar feature to ChatGPT in November 2023. Claude 2.1 introduces this capability under the name “System Prompts”. Anthropic describes system prompts as a way of providing context and instructions to Claude before asking it questions or giving it a task, such as specifying a particular goal or role. You can use system prompts for:

- Task Instructions

- Personalization

- Tone of Voice Instructions

- Creativity and Writing Style Guidance

- External Data

- Output Verification
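The uses listed above can be combined into a single system prompt. The sketch below assumes the legacy Text Completions prompt format that Claude 2.1 used at launch, in which the system prompt is plain text placed before the first `\n\nHuman:` turn. With the Messages API, the same string would instead be passed as the `system` parameter. `build_system_prompt`, `to_text_completion_prompt`, and the section labels are hypothetical and illustrative, not a format Anthropic requires.

```python
def build_system_prompt(task: str, role: str = "", tone: str = "",
                        style: str = "", reference: str = "",
                        verification: str = "") -> str:
    """Combine optional instructions into one system prompt.

    Each argument maps to one of the uses listed above; empty ones are skipped.
    The labels are illustrative, not a format Anthropic requires.
    """
    sections = [
        ("Task", task),                      # task instructions
        ("Role", role),                      # personalization
        ("Tone of voice", tone),             # tone of voice instructions
        ("Writing style", style),            # creativity and writing style
        ("Reference material", reference),   # external data
        ("Before answering", verification),  # output verification
    ]
    return "\n".join(f"{label}: {text}" for label, text in sections if text)


def to_text_completion_prompt(system: str, user_message: str) -> str:
    """Legacy Text Completions format: the system prompt goes before the first Human turn."""
    return f"{system}\n\nHuman: {user_message}\n\nAssistant:"


if __name__ == "__main__":
    system = build_system_prompt(
        task="Answer customer questions about our software subscription.",
        tone="Friendly and concise.",
        verification="If the answer is not in the reference material, say so.",
    )
    print(to_text_completion_prompt(system, "How do I cancel my plan?"))
```

Keeping each instruction in its own labelled line makes it easy to swap one element, such as the tone of voice, without rewriting the rest of the prompt.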

ZenoChat – A Better Alternative for Both

ZenoChat by TextCortex is a customizable, interactive conversational AI that automates and simplifies text-based tasks. It is available as a web application and as a browser extension. The browser extension is integrated with 30,000+ websites and apps, so you can use it anywhere, anytime.

ZenoChat Features

ZenoChat was developed to support you in various text-based tasks, from text generation to summarization. Some of the features of ZenoChat include:

- Text Generation (including blog posts, articles, emails, product descriptions etc.)

- Summarization

- Text Expander

- Translation Between 25+ Languages

- Research with Web Search

- Paraphrasing

- Grammar & Spelling Fix

- Tone of Voice Changing

- Speech-to-Text

- Text-to-Speech

and so much more. All features of ZenoChat can generate output in 25+ languages!

Fully Customizable AI Experience

Thanks to our “Individual Personas” and “Knowledge Bases” features, ZenoChat offers a fully customizable and interactive conversational AI experience.

Our "Individual Personas" feature allows you to adjust the writing style and tone of voice that ZenoChat will use when generating output. With this feature, you can build your own digital twin and use it to complete your writing tasks.

Our "Knowledge Bases" feature allows you to upload or connect the datasets that ZenoChat will process when generating output. Using this feature, you can train ZenoChat for specific tasks and even chat with your documents. Moreover, ZenoChat will cite the data it uses when generating output. This way, you can quickly review the cited document to double-check the accuracy of the output.

Ask Zeno Instead?

To get accurate information from search engines, you often need to visit dozens of websites and read their content. With ZenoChat, you can complete all your research in a conversational format without wasting time on search engines.

With its web search feature, ZenoChat can analyse up-to-date internet data and generate accurate answers to your questions. This way, you can save time by asking ZenoChat instead of searching the entire internet for information!
