As artificial intelligence becomes part of more and more daily tasks, the efficiency of our interactions with these systems has become paramount. The bridge between human intent and machine understanding is built through a subtle but crucial process: prompt engineering. It's a field that combines linguistic skill with technical knowledge to communicate more effectively with AI. Let's explore this concept and why it matters in today's technology landscape.

TL;DR

- AI integration in daily tasks emphasizes the need for efficient interactions with advanced systems.

- Prompt engineering in AI merges linguistic skill with technical knowledge for effective AI communication.

- A prompt in AI is a text-based instruction that helps produce specific outcomes from language models.

- Prompt engineering involves crafting inputs to optimize AI's responses.

- The rise of large language models like GPT-3 and GPT-4 has underlined the importance of prompt engineering.

- Prompt engineering has led to the emergence of 'Prompt Engineers' or 'AI whisperers.'

- Essential prompt engineering techniques include zero-shot, few-shot, chain-of-thought, generated knowledge prompting, and retrieval-augmented generation.

- Prompt engineering applications range from simple directive prompts to complex instructional prompts and creative prompts.

- Prompt engineering also uses contextual continuation prompts, which keep expanded text consistent with what came before.

- Image generation prompts in AI convert detailed textual descriptions into visuals.

- Different types of image generation prompts cater to landscape visualization, character concept art, product design mockups, and concept art for fiction.

- You can start learning prompt engineering through our YouTube Channel.

What is prompt engineering in AI?

Prompt engineering is the practice of carefully crafting and refining the text input given to an AI model so that the model produces the best possible output. It is especially important for generative AI systems that take text as input, such as large language models, chatbots, and text-to-image models. The role of the prompt engineer has emerged from the need for this skill.

What is an AI prompt?

An AI prompt is the input a user gives a language model to guide it toward a specific type of output. A prompt can take the form of a question, free text, a code snippet, or examples of the desired result.

How does prompt engineering work?

Prompt engineering focuses on developing and refining the instructions and context given to an AI model as text, so that the model's outputs are as useful as possible. The term 'prompt' refers specifically to the text-based instruction fed into generative AI systems such as large language models (LLMs), chatbots, or text-to-image models like Midjourney.

With the introduction of large language models like GPT-3 and GPT-4, chatbots built on them such as ChatGPT, and powerful text-to-image models such as DALL-E, Stable Diffusion, and Midjourney, prompt engineering has become increasingly important. As a result, a new job title has emerged: 'Prompt Engineer', sometimes informally called an 'AI whisperer' or 'AI interpreter.'

Prompt engineering has gained momentum because the quality of an AI's output depends closely on the task instructions and guidelines in the prompt. The prompt must convey the task clearly and supply enough information and context for the model to complete it in a way that meets the user's expectations and is genuinely useful. The field is evolving quickly.

How to learn prompt engineering?

Skills or experience in machine learning can benefit your work as a prompt engineer. For example, machine learning can be used to predict user behavior based on how users have interacted with a system in the past. Prompt engineers can then finesse how they prompt an LLM to generate material for user experiences.

Alternatively, you can start learning prompt engineering through our YouTube Channel. We offer several videos teaching you how to craft efficient prompts for LLMs.

Best Practices of Prompt Engineering

Create direct and understandable instructions

Ensure your guidance is precise and intelligible. The more clearly you articulate your needs, the better the model can serve you. For succinct responses, request brevity. For more advanced discourse, specify the requirement for expert-level content. Should you find the format unsuitable, provide a preferred example for the model to emulate. Direct instructions increase the likelihood of receiving your desired outcome.

Supply Sufficient References

Be aware that AI language models have the capacity to produce inaccurate information, particularly with obscure subjects or when prompted for sources. Just as notes can enhance a student's exam performance, equipping the model with reference texts, URLs, or documents can significantly reduce the chances of incorrect information.

Break Down Complex Tasks

Approach complex inquiries strategically by breaking them into smaller, more manageable parts. This mirrors best practices in software engineering, where systems are built modularly. Simplifying the task increases the accuracy of the model and allows for the creation of a step-by-step workflow, each stage building on the previous one.
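This step-by-step workflow is often called prompt chaining. Below is a minimal sketch. It assumes a hypothetical `call_model` function in place of a real LLM API call. Here that function only echoes the prompt's first line, so the code runs offline. Each stage receives one small instruction plus the previous stage's output.

```python
from typing import Callable, List

# Hypothetical stand-in for a real LLM API call: it only echoes the
# first line of the prompt so the pipeline runs without network access.
def call_model(prompt: str) -> str:
    first_line = prompt.splitlines()[0]
    return f"[model output for: {first_line}]"

def run_chain(steps: List[str], initial_input: str,
              model: Callable[[str], str] = call_model) -> List[str]:
    """Run each step's instruction on the previous step's output."""
    outputs: List[str] = []
    current = initial_input
    for instruction in steps:
        # Each prompt holds one small sub-task plus the prior stage's result.
        prompt = f"{instruction}\n\nInput:\n{current}"
        current = model(prompt)
        outputs.append(current)
    return outputs

steps = [
    "Extract the key claims from the text below.",
    "Check each claim below for internal contradictions.",
    "Write a three-sentence summary of the verified claims below.",
]
for out in run_chain(steps, "Example article text."):
    print(out)
```

Keeping each stage's instruction narrow makes it easier to see which step went wrong when the final output is off.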

Test your prompts systematically

For a consistent improvement in performance, it's imperative to test prompts systematically. Sometimes an amendment may improve a single case but could be detrimental when applied broadly. It's recommended to continually evaluate prompts across various scenarios, establishing templates for the prompts that consistently yield the most accurate and useful responses.
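Here is a minimal sketch of systematic testing. It scores each candidate prompt template against the same labeled test cases, so you can keep the one that performs best. `toy_model` is a hypothetical stand-in: it returns a bare one-word label only when the prompt asks for one word, so the two templates score differently. With a real model you would swap in an API call and probably use a more forgiving comparison than exact match.

```python
from typing import Callable, Dict, List, Tuple

def toy_model(prompt: str) -> str:
    # Hypothetical stand-in for an LLM: labels the text after the last colon,
    # but only answers in one word when the prompt asks for that format.
    text = prompt.rsplit(":", 1)[-1].lower()
    label = "positive" if ("love" in text or "great" in text) else "negative"
    if "one word" in prompt.lower():
        return label
    return f"I think the sentiment is {label}."

def evaluate(template: str, cases: List[Tuple[str, str]],
             model: Callable[[str], str]) -> float:
    """Return the fraction of cases where the reply exactly matches the label."""
    hits = 0
    for text, expected in cases:
        reply = model(template.format(text=text))
        if reply.strip().lower() == expected:
            hits += 1
    return hits / len(cases)

templates: Dict[str, str] = {
    "bare": "What is the sentiment of: {text}",
    "constrained": ("Classify the sentiment of the text as positive or negative. "
                    "Answer with one word.\nText: {text}"),
}
cases = [
    ("I love this song!", "positive"),
    ("The battery is terrible.", "negative"),
    ("Great camera.", "positive"),
]

for name, template in templates.items():
    print(f"{name}: {evaluate(template, cases, toy_model):.2f}")
```

Running every template against the same fixed cases is what catches an edit that helps one case but hurts others.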

Example of a quality prompt:

Create a concise summary written in a professional tone on the topic of sustainable energy solutions. The summary should be no longer than 300 words. Please include the latest trends in solar and wind power, and make sure to cite recent statistics from the IEA (International Energy Agency) 2023 report. The output format should be a bullet-point list.

Analysis of this prompt:

- "Create a concise summary ... on the topic of sustainable energy solutions": a clear instruction that defines the tone and the topic.

- "The summary should be no longer than 300 words": sets a specific length requirement.

- "Please include the latest trends in solar and wind power, and make sure to cite recent statistics from the IEA (International Energy Agency) 2023 report": guides the model toward specific content and a factual reference, which improves accuracy.

- "The output format should be a bullet-point list": clearly states the preferred format.
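Breaking a prompt into these components also makes it easy to reuse as a template. Below is a sketch using a hypothetical `PromptSpec` dataclass. Its fields correspond to the elements identified in the analysis: task, tone, topic, length, required content, source, and output format.

```python
from dataclasses import dataclass

@dataclass
class PromptSpec:
    # Hypothetical fields, one per element of a well-structured prompt.
    task: str
    tone: str
    topic: str
    max_words: int
    required_content: str
    source: str
    output_format: str

def build_prompt(spec: PromptSpec) -> str:
    """Assemble the prompt one component per sentence."""
    parts = [
        f"{spec.task} written in a {spec.tone} tone on the topic of {spec.topic}.",
        f"The response should be no longer than {spec.max_words} words.",
        f"Please include {spec.required_content}, and make sure to cite "
        f"recent statistics from {spec.source}.",
        f"The output format should be {spec.output_format}.",
    ]
    return " ".join(parts)

spec = PromptSpec(
    task="Create a concise summary",
    tone="professional",
    topic="sustainable energy solutions",
    max_words=300,
    required_content="the latest trends in solar and wind power",
    source="the IEA (International Energy Agency) 2023 report",
    output_format="a bullet-point list",
)
print(build_prompt(spec))
```

Changing one field, such as `output_format`, yields a new variant that you can test against the others while keeping the rest of the prompt fixed.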

Prompt Engineering Techniques

Now that you understand what prompt engineering is, it's time to dive into some common prompt engineering techniques. In this section, you'll learn how to apply the following techniques to your prompts to get the desired output from a language model.

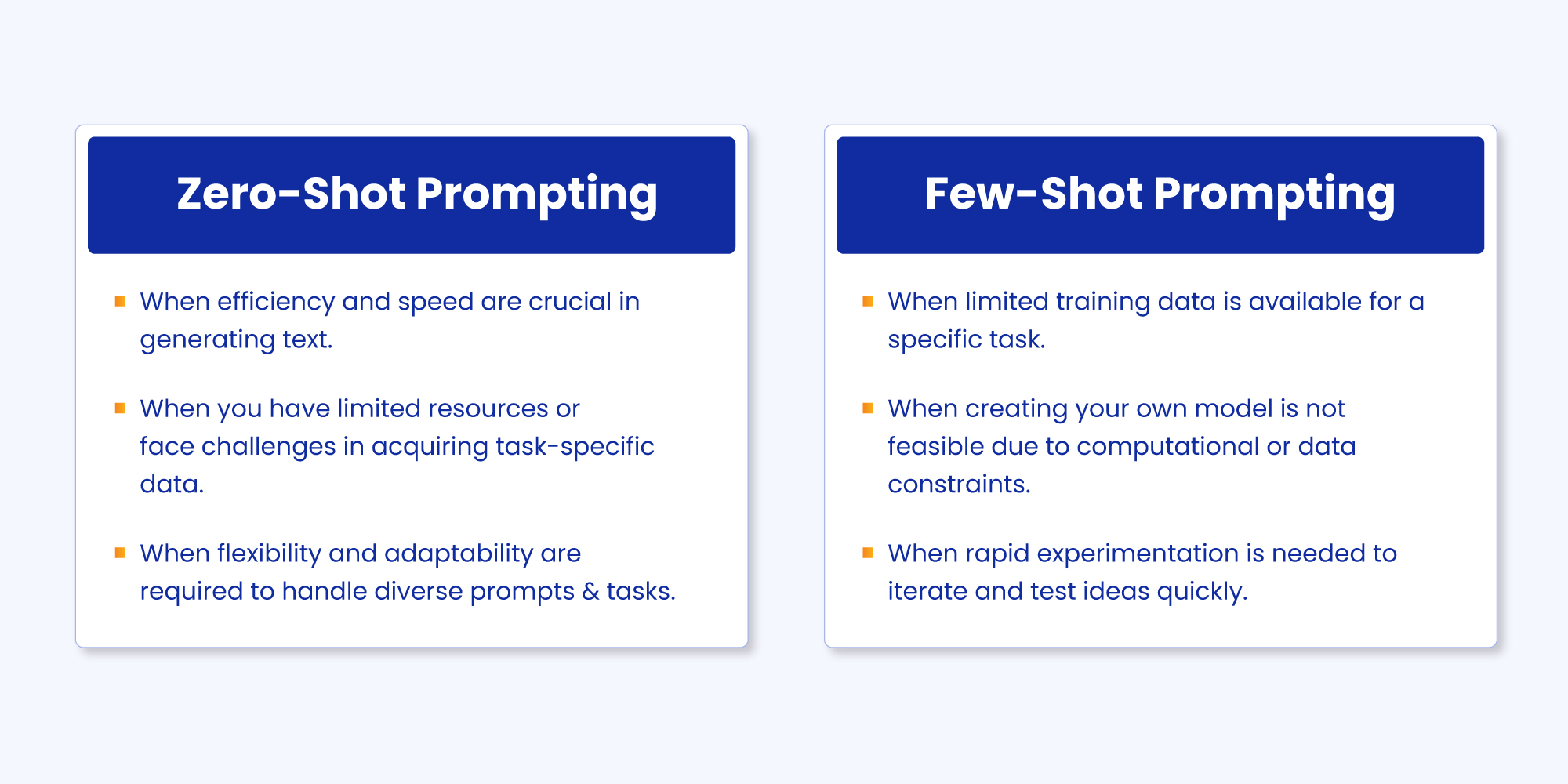

Zero-shot Prompting

Zero-shot prompting means asking the language model to perform a task without any examples or additional context. In the example below, the prompt gives the model no sample texts labeled with their sentiment, yet the LLM already understands "sentiment". That's zero-shot capability at work. When zero-shot prompting doesn't work, it's recommended to include demonstrations or examples in the prompt, which leads to few-shot prompting, covered in the next section.

Example Input: "What is the sentiment of the following text: 'I feel like dancing whenever I hear this song!'"

Example Output: "The sentiment of the text is positive."
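Below is a minimal sketch of how the zero-shot prompt above can be assembled in code. The `{"role": ..., "content": ...}` message list mirrors the chat format many LLM APIs accept, but that format is an assumption here, and no API is actually called.

```python
def zero_shot_prompt(instruction: str, text: str) -> str:
    """Build a zero-shot prompt: an instruction plus the input, no examples."""
    return f"{instruction}: '{text}'"

prompt = zero_shot_prompt(
    "What is the sentiment of the following text",
    "I feel like dancing whenever I hear this song!",
)

# Assumed chat-style message list: a single user turn, no demonstrations.
messages = [{"role": "user", "content": prompt}]
print(messages)
```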

Few-shot Prompting

Few-shot prompting conditions the model on a few examples to improve its performance. While large language models show remarkable zero-shot capabilities, they still fall short on more complex tasks in the zero-shot setting. Few-shot prompting enables in-context learning: we provide demonstrations in the prompt to steer the model toward better performance. The demonstrations serve as conditioning for the subsequent example, where we want the model to generate a response.

Example Input: "Generate a product review for this new phone based on these positive and negative examples: Positive: I really liked the phone's camera, it took amazing pictures! Negative: The battery life on this phone is terrible, it doesn't last long enough."

Example Output: "This phone's camera is fantastic! The pictures are always crystal-clear and have vibrant colors. However, the battery life is not great and only lasts a few hours."
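One common way to lay out few-shot demonstrations is as input/output pairs, followed by the new input with its output left blank. This sketch reuses the two review sentences from the example as labeled demonstrations. The `Text:`/`Sentiment:` labels and the third review are illustrative choices, not a required format.

```python
from typing import List, Tuple

def few_shot_prompt(instruction: str, demos: List[Tuple[str, str]], query: str) -> str:
    lines = [instruction, ""]
    # Each demonstration pairs an input with the output the model should imitate.
    for text, label in demos:
        lines.append(f"Text: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The query follows the same pattern, with the label left for the model.
    lines.append(f"Text: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)

demos = [
    ("I really liked the phone's camera, it took amazing pictures!", "positive"),
    ("The battery life on this phone is terrible, it doesn't last long enough.", "negative"),
]
print(few_shot_prompt(
    "Classify the sentiment of each text as positive or negative.",
    demos,
    "The screen is bright and easy to read.",
))
```

Ending the prompt on an open `Sentiment:` line nudges the model to complete the pattern with a single label.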

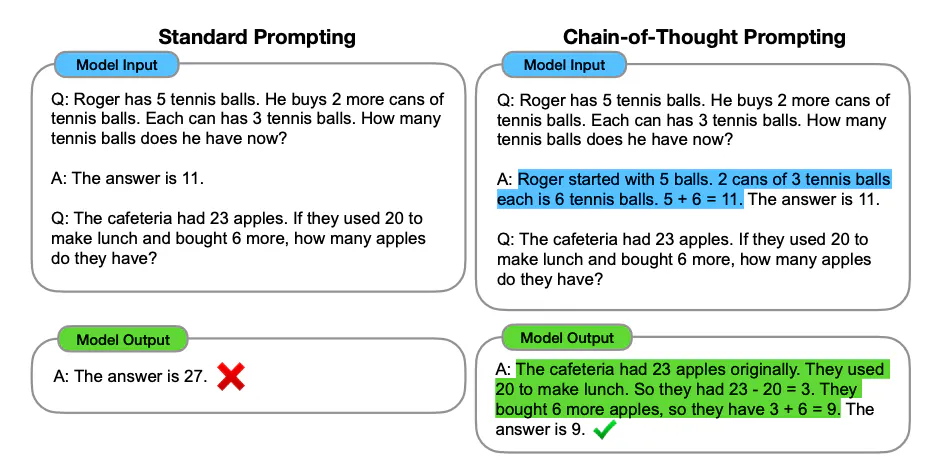

Chain-of-Thought Prompting

Introduced in Wei et al. (2022), chain-of-thought (CoT) prompting enables complex reasoning capabilities through intermediate reasoning steps. You can combine it with few-shot prompting to get better results on more complex tasks that require reasoning before responding.
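Below is a minimal sketch of few-shot chain-of-thought prompting. The exemplar's answer spells out its intermediate arithmetic before stating the result, which encourages the model to reason the same way on the new question. The exemplar, the questions, and the "The answer is N" convention are made up for illustration. `extract_answer` simply assumes the model's reply follows that convention.

```python
import re
from typing import Optional, Sequence

# Hypothetical exemplar: the answer shows each reasoning step, not just "15".
COT_EXEMPLAR = (
    "Q: A shop has 4 boxes with 6 apples each. It sells 9 apples. "
    "How many apples are left?\n"
    "A: 4 boxes with 6 apples each is 4 * 6 = 24 apples. "
    "Selling 9 leaves 24 - 9 = 15. The answer is 15."
)

def cot_prompt(question: str, exemplars: Sequence[str] = (COT_EXEMPLAR,)) -> str:
    """Put the worked exemplars first, then the new question with an open "A:"."""
    return "\n\n".join([*exemplars, f"Q: {question}\nA:"])

def extract_answer(reply: str) -> Optional[str]:
    """Pull the final number from a reply that says "The answer is N"."""
    match = re.search(r"The answer is (-?\d+)", reply)
    return match.group(1) if match else None

print(cot_prompt("A library has 3 shelves of 12 books. 5 books are checked out. "
                 "How many books remain on the shelves?"))
# A model reply in the exemplar's style might read:
print(extract_answer("3 * 12 = 36 books. 36 - 5 = 31. The answer is 31."))
```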

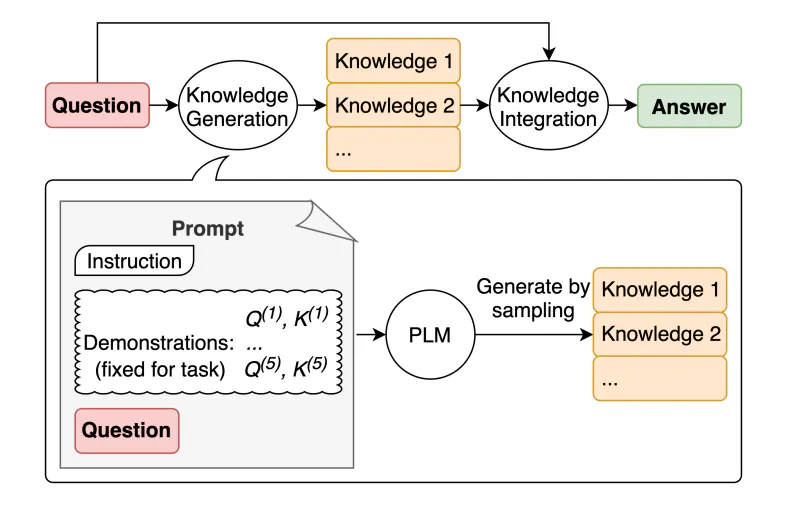

Generated Knowledge Prompting

Generated knowledge prompting has the model first generate relevant background knowledge about a question, then includes that knowledge in the prompt when asking for the final answer. With this extra information in the prompt, the model can give more detailed and accurate answers.

Let's see how it works in practice within the following context:

Context:

Educational psychology has long examined the impact of mnemonic devices on learning and memory. A question arises: Can utilizing mnemonic devices improve the retention of information in students?

Starting Prompt:

Does the use of mnemonic devices in education help students retain information better?

Generated Knowledge Prompts:

Input: Mnemonic devices are tools that help people remember information.

Knowledge: Mnemonic devices leverage patterns of letters, ideas, or associations that assist in the memory process. Effective mnemonic strategies include the creation of acrostics, chunking information, and the method of loci. These techniques engage both linguistic and spatial memory systems, which can lead to improved retention of information.

Input: Educational psychology studies how people learn.

Knowledge: Educational psychology is an interdisciplinary field that combines theories and methodologies from psychology, sociology, and philosophy to understand the cognitive and affective processes involved in learning. It applies this understanding to instructional design, curriculum development, and classroom management, aiming to optimize learning outcomes.

Now, we can combine this generated knowledge to address the initial prompt with a more informed output.

Integrated Knowledge-based Prompt:

"Question: Does the use of mnemonic devices in education help students retain information better?

Knowledge 1: Mnemonic devices use patterns of letters, ideas, or associations to aid memory. Techniques such as acrostics, chunking, and the method of loci engage multiple memory systems.

Knowledge 2: Educational psychology seeks to optimize learning outcomes by understanding cognitive and affective learning processes and applying this to pedagogic strategies.

Explain and answer."

Answer:

"Yes, the use of mnemonic devices in education supports better retention of information. These tools engage both linguistic and spatial memory systems, which educational psychology identifies as key cognitive processes in learning. By using methods like acrostics or the method of loci, educators can provide students with strategies to recall information more effectively, leading to higher learning outcomes as researched and supported within the field of educational psychology."

This example showcases how generating knowledge based on relevant information can provide a comprehensive and nuanced response to complex educational questions.
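The two stages of the example can be sketched as follows. `fake_model` is a hypothetical stand-in for a real LLM call, so the code runs offline. The `Input:`/`Knowledge:` and `Knowledge 1:`/`Explain and answer.` layouts mirror the prompts shown in the example.

```python
from typing import Callable, List

def knowledge_prompt(seed: str) -> str:
    # Stage 1: ask the model to expand a seed statement into background knowledge.
    return f"Input: {seed}\nKnowledge:"

def integrated_prompt(question: str, knowledge: List[str]) -> str:
    # Stage 2: combine the question with the generated knowledge statements.
    lines = [f"Question: {question}", ""]
    for i, fact in enumerate(knowledge, start=1):
        lines += [f"Knowledge {i}: {fact}", ""]
    lines.append("Explain and answer.")
    return "\n".join(lines)

def generated_knowledge_answer(question: str, seeds: List[str],
                               model: Callable[[str], str]) -> str:
    facts = [model(knowledge_prompt(seed)).strip() for seed in seeds]
    return model(integrated_prompt(question, facts))

def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for an LLM call, so the sketch runs offline.
    return "(generated knowledge)" if prompt.startswith("Input:") else "(final answer)"

question = ("Does the use of mnemonic devices in education help students "
            "retain information better?")
seeds = [
    "Mnemonic devices are tools that help people remember information.",
    "Educational psychology studies how people learn.",
]
print(integrated_prompt(question, [fake_model(knowledge_prompt(s)) for s in seeds]))
print(generated_knowledge_answer(question, seeds, fake_model))
```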

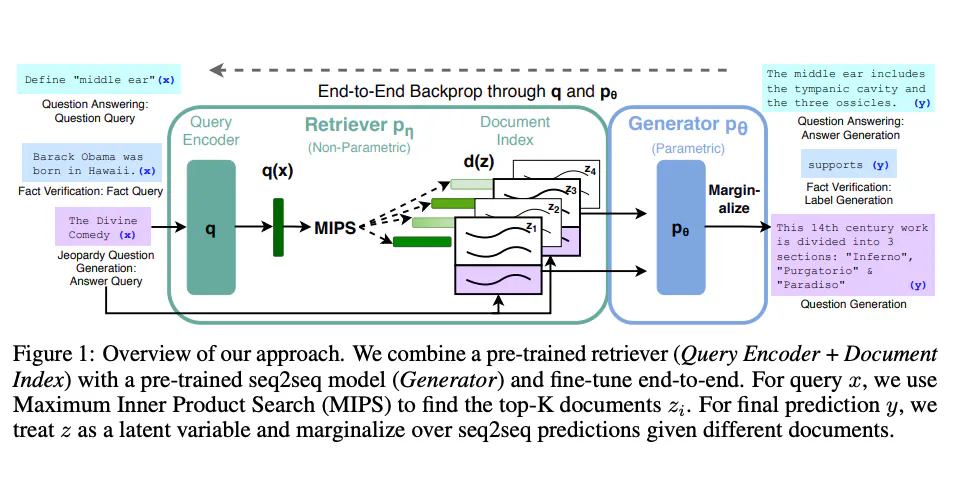

Retrieval Augmented Generation (RAG)

Standard language models are adaptable and can be fine-tuned for a range of common tasks, such as sentiment analysis or named entity recognition. These tasks usually don't require additional factual information.

However, for tasks that are more intricate and demand extensive knowledge, one can construct a system using language models that draw upon additional, external sources of information. This approach enhances the factual accuracy, bolsters the dependability of the responses provided by the model, and serves to reduce instances of "hallucination" in the output. Here is a small example of a RAG implementation on ZenoChat working with an external CSV file:
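Independently of ZenoChat, the retrieve-then-generate idea can be sketched without any libraries. The code below loads rows from a small CSV, scores each row by word overlap with the question, and places the best matches in the prompt as context. The CSV contents are hypothetical. Word overlap is a simplification: production RAG systems usually retrieve with embedding-based vector search.

```python
import csv
import io
import re
from typing import Dict, List, Set

# Hypothetical CSV contents standing in for an external data file.
CSV_DATA = """id,text
1,The warranty covers manufacturing defects for two years.
2,Returns are accepted within 30 days of purchase with a receipt.
3,The battery is rated for about 18 hours of video playback.
"""

def tokenize(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, rows: List[Dict[str, str]], k: int = 1) -> List[Dict[str, str]]:
    """Rank rows by how many words they share with the query; keep the top k."""
    q = tokenize(query)
    ranked = sorted(rows, key=lambda r: len(q & tokenize(r["text"])), reverse=True)
    return ranked[:k]

def build_rag_prompt(query: str, passages: List[Dict[str, str]]) -> str:
    # Retrieved passages go into the prompt as grounding context.
    context = "\n".join(f"- {p['text']}" for p in passages)
    return ("Answer the question using only the context below. "
            "If the context does not contain the answer, say so.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}\nAnswer:")

rows = list(csv.DictReader(io.StringIO(CSV_DATA)))
question = "How long does the battery last?"
top = retrieve(question, rows, k=1)
prompt = build_rag_prompt(question, top)
print(prompt)
```

Telling the model to answer only from the supplied context, and to say so when the context is insufficient, is what lets retrieval reduce hallucinations rather than just add text.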

Prompt Engineering Examples

Prompt engineering is applied in a wide range of scenarios to guide AI performance. Here are some concrete examples that illustrate the art and science of structuring prompts effectively.

Simple Directive Prompts

For straightforward tasks, such as asking the model to generate a list or to respond to a direct query, simple directive prompts are sufficient. These prompts are clear, concise, and to the point.

Input: "List the seven wonders of the modern world."

Output: "The seven wonders of the modern world include: 1. Chichén Itzá in Mexico, 2. Christ the Redeemer Statue in Brazil, 3. The Great Wall of China in China, 4. Machu Picchu in Peru, 5. Petra in Jordan, 6. The Roman Colosseum in Italy, and 7. The Taj Mahal in India."

Complex Instructional Prompts

When dealing with more intricate requests, such as drafting a comprehensive essay or devising a multi-step algorithm, complex instructional prompts come into play. These prompts provide detailed information, setting up expectations for the AI to follow.

Input: "Write a comprehensive essay on the impact of climate change on marine biodiversity, including at least three key effects, potential solutions, and a conclusion."

Output: "Climate change has had a profound impact on marine biodiversity, with rising temperatures, ocean acidification, and sea-level rise altering the health and distribution of marine species. Key effects include coral bleaching, shifts in species distributions, and changes in breeding times..."

Creative Conceptualization Prompts

Sometimes, the goal is to nudge the AI towards creating original content, such as stories, poems, or visual artwork. Creative conceptualization prompts rely on evocative language and vivid descriptions to spark the model's ingenuity.

Input: "Create a story about a detective who can communicate with animals to solve mysteries. Start with the detective getting a cryptic message from a parrot about a missing prized racehorse."

Output: "Detective Eliza Thornberry's morning was interrupted by the frantic squawks of her informant, a scarlet parrot named Pascal. 'The swift steed has vanished before dawn,' he squawked. Known for her unique ability to commune with the animal kingdom, Eliza knew this message could only mean one thing: the famous racehorse, Lightning Hoof, had been stolen..."

Contextual Continuation Prompts

To continue a piece of text in the same style or to expand on a topic with continuity, contextual continuation prompts are utilized. They ensure the AI recognizes the preceding content and maintains the thread in subsequent contributions.

Input: "Continue the following text in the style of a suspense novel: 'As the clock struck midnight, a shadow slipped through the cracked window. The safe, thought to secure the family's century-old secret, was now wide open.'"

Output: "The once quiet room thrummed with the tension of the newfound breach. Heart racing, the intruder’s eyes scanned the dimly lit interior, the moonlight glinting off the metal safe’s exposed contents. With the family's forbidden history within reach, the mystery poised on the edge of revelation. Little did they know, another pair of eyes watched from the darkness, witness to the unfolding conspiracy."

These are just a few illustrations of how prompt engineering shapes the dialogue between humans and AI and, ultimately, how useful the model's output is.

As a prompt engineer, mastering these techniques and recognizing when to apply them can significantly enhance the interactivity and utility of AI-generated content.

Image Generation Prompts

Prompts for image generation guide AI to convert textual descriptions into compelling visuals. The key is to provide enough detail to help the model envision and render the concept accurately.

Illustrative Visualization Prompts

Input: "Design an image of a serene landscape featuring a small cottage by a lake, with mountains in the background shrouded in mist, during the golden hour of sunset."

Character Concept Art Prompts

Input: "Create an image of a character concept for a steampunk inventor, complete with Victorian-era clothing adorned with brass gadgets, holding a custom-made wrench, with goggles resting on their hat."

Product Design Mockup Prompts

Input: "Generate an image of a sleek, modern smartphone design with a curved glass display, bezel-less screen, and an interactive AI assistant on the display."

Concept Art for Fiction Prompts

Input: "Depict an image of a bustling alien market scene set on a space station, with various intergalactic species trading exotic goods under neon signs in an array of alien scripts."
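These prompts work by layering details such as subject, setting, lighting, and style, so one way to keep them consistent is to assemble them from parts. The helper below is hypothetical, not any image model's API. Comma-separated descriptors are a common convention for text-to-image prompts, though models differ in how much weight they give each part.

```python
def image_prompt(subject: str, setting: str = "", lighting: str = "", style: str = "") -> str:
    """Join the non-empty details in a fixed order: subject first, style last."""
    parts = [subject, setting, lighting, style]
    return ", ".join(part for part in parts if part)

print(image_prompt(
    subject="a small cottage by a lake",
    setting="mountains in the background shrouded in mist",
    lighting="golden hour of sunset",
    style="serene landscape",
))
```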
