While AI continues to develop at full speed, Apple has entered the field with a new approach to training an MLLM (Multimodal Large Language Model). For the MM1 model, Apple adapted the traditional MLLM training process to make it more effective, combining large, carefully mixed datasets with well-chosen parameters and hyperparameters. If you are wondering how the MM1 model works, you are in the right place!

In this article, we will explore what the MM1 model is and how it works.

Ready? Let's dive in!

TL;DR

- Apple's MM1 is a multimodal large language model developed with a unique pre-training and fine-tuning approach.

- The Apple MM1 model was introduced in three sizes: 3B, 7B, and 30B parameters.

- While the Apple MM1 model outperforms GPT-4V and Gemini Ultra on some benchmarks, it trails them by a small margin on others.

- During the training of the Apple MM1 model, Apple's researchers re-examined the image encoder, the vision-language connector, and the training data mix.

- If you need an AI assistant to support you in both daily and professional life, look no further than TextCortex.

- TextCortex aims to automate your workload and boost your productivity with its unique features and advanced AI capabilities.

What is Apple MM1?

Apple's MM1 model is a Multimodal Large Language Model (MLLM) designed to handle vision-based tasks with high performance at a relatively small model size. It was developed with a new approach to the MLLM training process, one that aims for maximum efficiency with a minimum number of parameters.

Apple’s MM1 Model Sizes

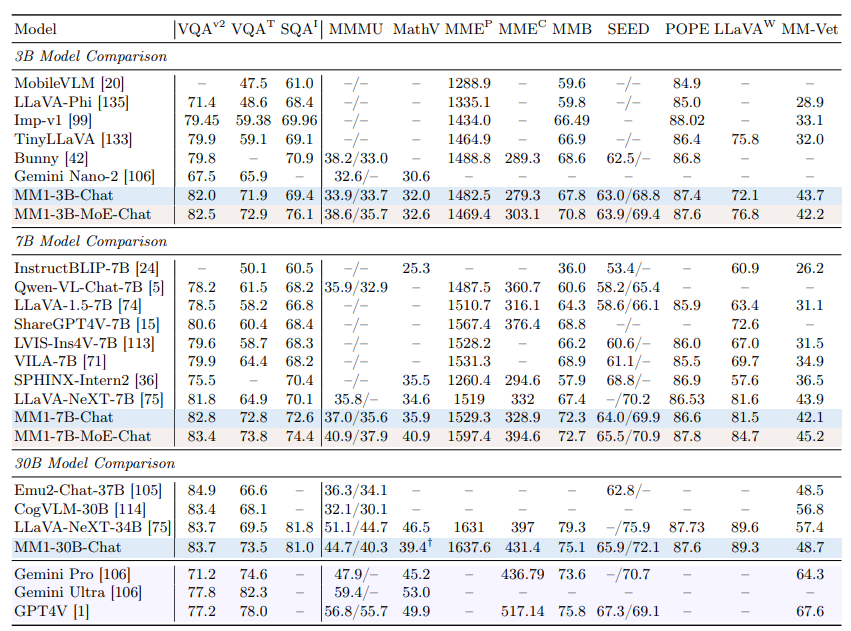

The Apple MM1 multimodal large language model comes in three sizes, each with a different number of parameters: 3B, 7B, and 30B. In addition to these dense models, Apple built mixture-of-experts (MoE) variants, which increase the total parameter count while activating only a subset of experts for each token. According to Apple, this scaling strategy is a key factor behind MM1's strong results in both the pre-training and fine-tuning stages.
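The mixture-of-experts idea can be illustrated with a minimal sketch of top-k expert routing for a single token. Everything here is an assumption for illustration: the router (a plain dot product per expert), top-2 gating, and the toy experts are hypothetical and are not taken from Apple's paper.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_weights, experts, top_k=2):
    """Route one token vector x through the top_k highest-scoring experts.

    gate_weights: one (hypothetical) learned router vector per expert.
    experts: callables mapping a vector to a vector of the same length.
    Returns the mixed output and the indices of the experts that ran.
    """
    # Router logits: dot product of the token with each expert's gate vector.
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in gate_weights]
    # Keep only the top_k experts for this token (sparse activation).
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Softmax over the selected logits so the mixing weights sum to 1.
    weights = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for w, i in zip(weights, top):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + w * y_j for o, y_j in zip(out, y)]
    return out, top


# Toy usage: four hypothetical experts that scale the input by 1, 2, 3, 4.
toy_experts = [(lambda v, k=k: [k * vi for vi in v]) for k in range(1, 5)]
out, top = moe_forward([1.0, 0.0], [[1, 0], [2, 0], [3, 0], [0, 0]], toy_experts)
print(top)  # [2, 1]
```

Because only the selected experts run for each token, the compute per token stays close to that of a smaller dense model even as the total parameter count grows.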

Is MM1 Better Than ChatGPT?

Thanks to its strong pre-training and fine-tuning results, the Apple MM1 model can compete with models such as GPT-4V and Gemini Ultra and even outperforms them on some benchmarks. For example, the MM1 30B model outperformed both GPT-4V (GPT-4 with vision) and Gemini Ultra on the VQAv2 visual question answering benchmark. On other benchmarks, MM1 scores slightly below Gemini Ultra and GPT-4V.
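VQAv2 results like the one above are usually reported as VQA accuracy. The sketch below uses the commonly cited simplified form of that metric, where a predicted answer scores min(matches / 3, 1) against the human annotations for a question. The official evaluation also normalizes answers and averages over subsets of annotators, which this sketch skips; the function names are hypothetical.

```python
def vqa_accuracy(predicted, human_answers):
    """Simplified VQA accuracy for one question.

    An answer counts as fully correct if at least 3 of the (typically 10)
    human annotators gave exactly that answer; fewer matches earn partial credit.
    """
    matches = sum(1 for a in human_answers if a == predicted)
    return min(matches / 3.0, 1.0)


def benchmark_score(predictions, annotations):
    """Mean simplified VQA accuracy over all questions, as a percentage."""
    scores = [vqa_accuracy(p, a) for p, a in zip(predictions, annotations)]
    return 100.0 * sum(scores) / len(scores)


# Usage: one correct answer and one wrong answer give a score of 50.0.
print(benchmark_score(["yes", "no"], [["yes"] * 10, ["yes"] * 10]))
```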

How Can I Access MM1?

Since Apple has not yet made the MM1 model publicly available, there is currently no way to try it. However, Apple's research team has stated that all MM1 sizes will be available soon.

How Does Apple’s MM1 Work?

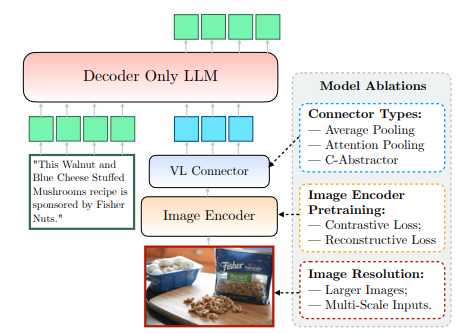

Apple's MM1 model, which is not yet publicly available, was trained with an approach that goes beyond traditional methods. According to Apple's researchers, training a model efficiently requires examining the complex relationship between an MLLM's architectural design and the mix of diverse datasets it learns from. The goal is maximum efficiency with minimum parameters. These details come from the paper Apple published, so let's take a closer look at how Apple's MM1 works.

Image Encoder

While training the MM1 model, Apple's researchers found that, among the image encoder choices they tested, image resolution has the biggest impact on performance, ahead of encoder size and the encoder's pre-training data. The MM1 model uses images with a resolution of 378x378 pixels. Its image encoder is a ViT-H (Vision Transformer – Huge) model pre-trained with CLIP (Contrastive Language-Image Pretraining). The Vision Transformer architecture was introduced by Google for image classification, while CLIP is a training method from OpenAI that learns a shared embedding space for images and text.
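To see why resolution matters so much, it helps to count how many patch tokens a Vision Transformer produces for a square image. A minimal sketch, assuming a patch size of 14 pixels (as in the ViT-H/14 variant); the article does not state the patch size, so treat that value as an assumption.

```python
def num_patch_tokens(image_size, patch_size=14):
    """Number of patch tokens a ViT produces for a square image.

    patch_size=14 is an assumption (ViT-H/14-style), not a figure
    quoted in this article.
    """
    if image_size % patch_size != 0:
        raise ValueError("image_size must be a multiple of patch_size")
    per_side = image_size // patch_size  # patches along one edge
    return per_side * per_side


for size in (224, 336, 378):
    print(size, num_patch_tokens(size))
# 224 256
# 336 576
# 378 729
```

Under this assumption, moving from 224x224 to 378x378 nearly triples the number of patch tokens (256 to 729), because the token count grows with the square of the image side length.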

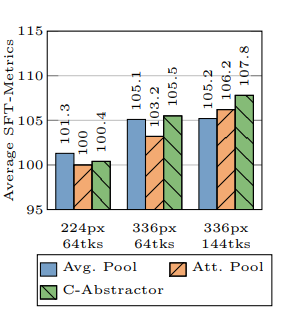

Vision-Language Connector

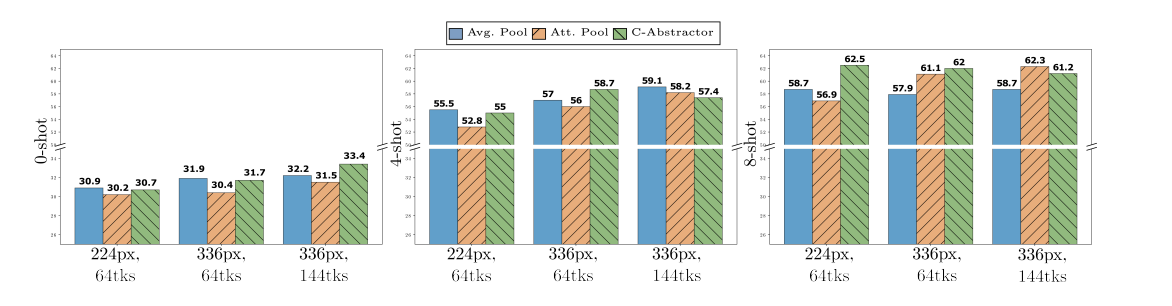

According to Apple's researchers, if you want an MLLM to perform well on vision-based tasks, the image resolution and the number of visual tokens passed to the language model matter most when designing the vision-language (VL) connector, while the exact connector architecture has comparatively little effect. For MM1, Apple used a VL connector that produces 144 image tokens.
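A VL connector turns the image encoder's grid of patch features into a fixed number of tokens the language model can read. The sketch below shows one simple way to get 144 tokens: adaptive average pooling of a 27x27 patch grid (the size you would get from 378x378 images with 14-pixel patches) down to 12x12, followed by a linear projection. This is not Apple's connector design; the pooling choice, the grid size, and the projection matrix `proj` are assumptions for illustration.

```python
def adaptive_avg_pool_2d(grid, out_size):
    """Average-pool an H x W grid of feature vectors to out_size x out_size.

    Bin edges use floor for the start and ceil for the end, so H and W
    need not be multiples of out_size (e.g. 27 -> 12).
    """
    h, w = len(grid), len(grid[0])
    dim = len(grid[0][0])
    pooled = []
    for i in range(out_size):
        r0, r1 = (i * h) // out_size, -(-((i + 1) * h) // out_size)
        row = []
        for j in range(out_size):
            c0, c1 = (j * w) // out_size, -(-((j + 1) * w) // out_size)
            cells = [grid[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append([sum(v[d] for v in cells) / len(cells) for d in range(dim)])
        pooled.append(row)
    return pooled


def connect(grid, out_size, proj):
    """Pool to out_size**2 visual tokens, then map each one into the LLM's
    embedding space with a hypothetical linear layer proj[d_out][d_in]."""
    pooled = adaptive_avg_pool_2d(grid, out_size)
    tokens = [vec for row in pooled for vec in row]  # flatten row-major
    return [[sum(p * x for p, x in zip(p_row, vec)) for p_row in proj] for vec in tokens]


# Usage: a 27x27 grid of 2-d features becomes 144 tokens of width 3.
grid = [[[1.0, 2.0] for _ in range(27)] for _ in range(27)]
proj = [[1, 0], [0, 1], [1, 1]]
tokens = connect(grid, 12, proj)
print(len(tokens))  # 144
```

Because the pooled grid has a fixed size, the language model receives 144 visual tokens per image regardless of how many patches the encoder produced.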

Which Data Was Apple MM1 Trained On?

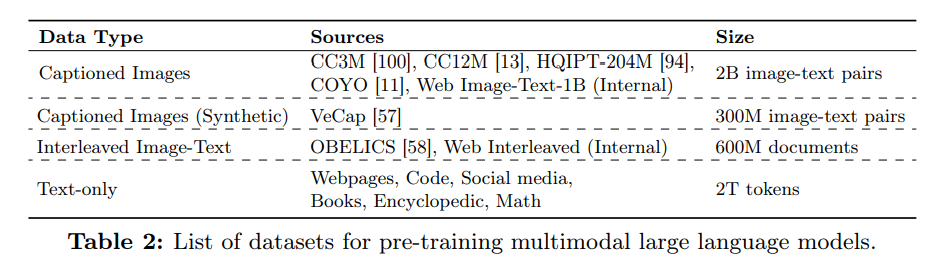

The training data of a Multimodal Large Language Model (MLLM) serves as both its memory and the knowledge it uses to generate output. Therefore, the more diverse and extensive an MLLM's training data, the more accurate and relevant its output tends to be.

Apple's researchers used a large amount of text and image data to improve the MM1 model's zero-shot and few-shot performance. According to Apple's paper, the MM1 model is trained on a data mix of 45% interleaved image-text documents, 45% image-text pair documents, and 10% text-only documents.
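The 45/45/10 mix can be implemented as weighted sampling over data sources. A minimal sketch, assuming each training example is drawn independently according to the mixture weights; the source names and functions are hypothetical, and Apple's actual data loader is not described in this article.

```python
import random

# Relative weights (in percent) for the reported 45/45/10 data mix.
# The source names are hypothetical labels.
MIXTURE = {
    "interleaved_image_text": 45,
    "image_text_pairs": 45,
    "text_only": 10,
}


def sample_sources(n, mixture=MIXTURE, seed=0):
    """Choose which data source each of n training examples is drawn from."""
    rng = random.Random(seed)  # seeded for reproducibility
    names = list(mixture)
    weights = [mixture[k] for k in names]
    return rng.choices(names, weights=weights, k=n)


def expected_counts(batch_size, mixture=MIXTURE):
    """Expected number of examples per source in a batch of batch_size."""
    total = sum(mixture.values())
    return {k: batch_size * w / total for k, w in mixture.items()}


print(expected_counts(1000))
```

Sampling each example independently keeps the mixture correct on average; a loader could instead fix the exact count per source in every batch if stricter control were needed.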
