Meta AI announced the Llama 3 model on April 18, 2024, bringing its LLM lineup closer to the current state of AI technology. Although Llama 3 is more advanced than its predecessor, Llama 2, it mainly competes with mid-tier large language models such as GPT-3.5 and Claude 3 Sonnet. Llama 3 promises improved reasoning, more training data, and better language understanding than its predecessor. If you are wondering about the Llama 3 model, you are in the right place!

In this article, we will explore the Llama 3 model and its features together.

If you're ready, let's start!

TL;DR

- The Llama 3 model is a large language model developed by Meta AI and announced on April 18, 2024.

- Llama 3 comes in two model sizes suitable for different use cases: 8B and 70B.

- You can visit Meta AI's official website to access the Llama 3 model, but Llama 3 is not currently available in all countries.

- If you want to access the Llama 3 model worldwide, ZenoChat by TextCortex is your saviour.

- The Llama 3 model outperformed its rivals, Gemini Pro 1.5 and Claude 3 Sonnet, in most benchmarks.

- The Llama 3 model is trained with specially filtered, high-quality publicly available data.

- If you are looking for a better conversational AI than Llama 3, ZenoChat by TextCortex is designed for you with its customization options and text and image output capabilities.

Meta AI’s Llama 3 Review

The Llama 3 model is a large language model introduced by Meta AI on April 18, 2024. It offers improved features and output generation capabilities compared to its predecessor, and aims to deliver state-of-the-art performance across a wide range of industries with its improved reasoning, fine-tuned language understanding, and new capabilities.

Another goal of Meta AI when developing the Llama 3 model was to improve its helpfulness. Meta AI aimed to boost the efficiency of the Llama 3 model by releasing it to the community and AI developers while it was still under development. Meta AI's future plans for the Llama 3 model include making it multilingual and multimodal, increasing the context window, and improving its performance.

Llama 3 Model Sizes

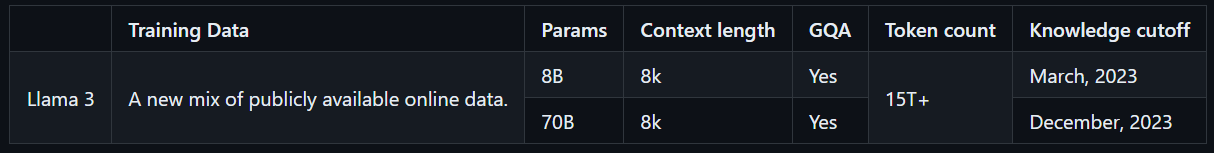

Llama 3 comes in two model sizes that are suitable for different use cases: 8B and 70B. The Llama 3 8B model is more compact, with a total of 8 billion parameters, and can generate output faster. The Llama 3 70B model, on the other hand, has a total of 70 billion parameters and is better suited to complex tasks. Additionally, the Llama 3 8B model was trained on publicly available online data up to March 2023, while the Llama 3 70B model was trained on publicly available online data up to December 2023.
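To get a feel for what the two sizes mean in practice, here is a minimal back-of-the-envelope sketch in Python. It assumes the nominal parameter counts (8 billion and 70 billion) and common storage precisions. The function name and the precision table are our own illustration, not something published by Meta AI, and the estimate covers model weights only.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Weights only; activations and the KV cache need additional memory.
# Parameter counts are the nominal 8B / 70B figures (an assumption).

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Return the approximate weight size in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in [("Llama 3 8B", 8e9), ("Llama 3 70B", 70e9)]:
        for precision in ("fp16", "int4"):
            size = weight_memory_gb(params, precision)
            print(f"{name} @ {precision}: ~{size:.0f} GB")
```

Under these assumptions, the 8B model needs roughly 16 GB for 16-bit weights, while the 70B model needs roughly 140 GB, which is why the smaller model is the easier one to run and the faster one to respond.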

How to Access Llama 3?

To access the Llama 3 model, you can visit Meta AI's official website, click the "Get Started" button, and follow the steps. However, unlike many other LLMs, the Llama 3 model is not accessible in every country, which limits its availability. Fortunately, there are ways for you to experience the Llama 3 model worldwide.

With ZenoChat by TextCortex, you can access and experience both sizes of the Llama 3 model without any limits. ZenoChat will support Llama 3 8B and 70B LLMs in addition to GPT-4, Sophos 2, Mixtral, Claude 3 Opus and Sonnet LLMs. This way, you can freely experience the Llama 3 model without Meta AI's country restrictions.

Meta AI’s Llama 3 Core Features

Llama 3, Meta AI's newest and most advanced model, offers higher performance and more features than its predecessor. The Llama 3 model was introduced as a competitor not to high-end LLMs such as GPT-4 and Gemini Ultra but to more frequently used models such as GPT-3.5 and Gemini Pro. Let's take a closer look at the core features of Llama 3.

Model Performance

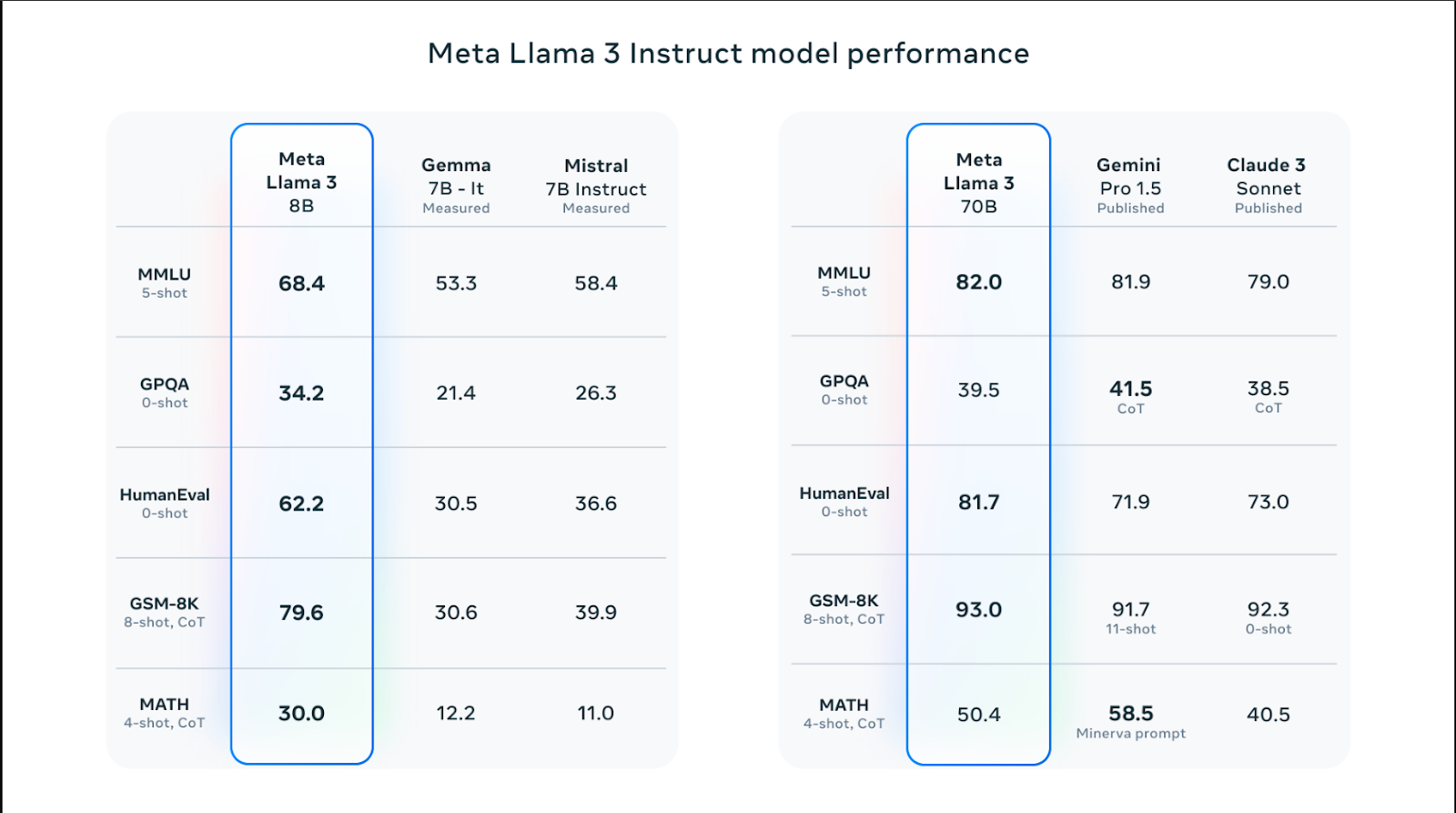

Llama 3 models come in different sizes and outperform Llama 2 models in skills such as reasoning, code generation, math, and instruction following. Among the Llama 3 models, the 70B model scored 82.0 on the MMLU benchmark, ahead of popular models like Claude 3 Sonnet and Gemini Pro 1.5. In fact, it performs better than Claude 3 Sonnet and Gemini Pro 1.5 in all benchmarks except MATH, which measures math skills, and GPQA, which measures graduate-level question answering.

The Llama 3 8B model outperformed its rivals, Gemma 7B and Mistral 7B, in the HumanEval, MMLU, GPQA, GSM-8K and MATH benchmarks. In other words, both Llama 3 model sizes perform better than most rival models in their class.

Training Data

The Llama 3 model was trained with a large amount of data, which is critical to building a high-performance Large Language Model. Specifically, it was trained with 15T tokens from publicly available sources - 7 times more than the amount of data used to train the Llama 2 model.

In addition, more than 5% of the Llama 3 pretraining dataset consists of high-quality non-English data covering over 30 languages. Together with the larger dataset, this has resulted in a significant increase in the model's language understanding, creativity, and prompt-following skills.

The Meta AI team has developed a series of data filtering pipelines to train the Llama 3 model with high-quality and reliable data. These pipelines include NSFW filters, heuristic filters, semantic deduplication, and text classifiers that predict data quality. Moreover, since the Llama 2 model is good at identifying high-quality data, it was used to generate the training data for the text-quality classifiers behind Llama 3.
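To make the idea of a filtering pipeline more concrete, here is a minimal sketch in Python. It is not Meta AI's actual pipeline, whose details are not public. Every function name, threshold, and blocklist entry below is a hypothetical placeholder. The deduplication step uses exact hashing of normalized text as a simple stand-in for semantic deduplication, and the quality score is a toy heuristic standing in for a trained text classifier.

```python
import hashlib
import re
from typing import Callable, Iterable, Iterator

# Hypothetical placeholder; a real NSFW filter is far more sophisticated.
BLOCKLIST = {"badword"}


def passes_heuristics(text: str, min_words: int = 5) -> bool:
    """Drop very short documents and ones dominated by a single word."""
    words = text.split()
    if len(words) < min_words:
        return False
    most_common = max(words.count(w) for w in set(words))
    return most_common / len(words) <= 0.5


def passes_blocklist(text: str) -> bool:
    """Drop documents that contain any blocklisted term."""
    tokens = set(re.findall(r"[a-z']+", text.lower()))
    return not (tokens & BLOCKLIST)


def normalized_hash(text: str) -> str:
    """Hash of lowercased, whitespace-collapsed text (exact dedup only)."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def toy_quality_score(text: str) -> float:
    """Stand-in for a trained quality classifier: share of distinct words."""
    words = text.lower().split()
    return len(set(words)) / len(words) if words else 0.0


def filter_corpus(
    docs: Iterable[str],
    quality_score: Callable[[str], float] = toy_quality_score,
    threshold: float = 0.5,
) -> Iterator[str]:
    """Yield documents that survive every filtering stage, in order."""
    seen = set()
    for doc in docs:
        if not passes_heuristics(doc) or not passes_blocklist(doc):
            continue
        key = normalized_hash(doc)
        if key in seen:  # duplicate of a document we already kept or scored
            continue
        seen.add(key)
        if quality_score(doc) >= threshold:
            yield doc
```

The stages run from cheapest to most expensive, so the (normally costly) quality classifier only sees documents that have already passed the simpler checks.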

Optimization

Llama 3 models are specially optimized for frequently used GPU and CPU hardware. The better software is optimized for specific hardware, the faster and more efficiently it runs. The Llama 3 model is optimized for Intel, AMD, and Nvidia hardware. Moreover, Intel has published a detailed guide on the performance of the Llama 3 model.

Safety

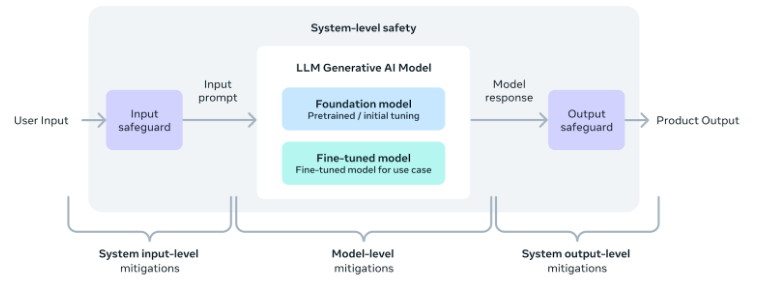

The Meta AI team recognizes the importance of safe usage of AI tools and software, including the Llama 3 model. Accordingly, safety measures were built in during the model's development to ensure that its output is safe, harmless, and ethical.

The team has also provided a system-level safety implementation guide for those who wish to develop applications using the Llama 3 model. With these measures in place, users can enjoy the convenience and benefits of AI tools while using them in a safe and responsible manner.
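As a rough illustration of what "system-level" safety can mean for an application, the sketch below wraps a model call with a check on the user's prompt and a second check on the model's response. This is only a generic pattern under our own assumptions, not the content of Meta AI's guide. `guarded_generate`, `toy_generate`, and `toy_is_unsafe` are hypothetical names, and the keyword check is a placeholder for a real safety classifier.

```python
from typing import Callable

REFUSAL = "Sorry, I can't help with that request."


def guarded_generate(
    prompt: str,
    generate: Callable[[str], str],
    is_unsafe: Callable[[str], bool],
) -> str:
    """Check the prompt before the model call and the response after it."""
    if is_unsafe(prompt):
        return REFUSAL
    response = generate(prompt)
    if is_unsafe(response):
        return REFUSAL
    return response


# Hypothetical stand-ins so the sketch runs without a real model.
def toy_is_unsafe(text: str) -> bool:
    return "build a bomb" in text.lower()


def toy_generate(prompt: str) -> str:
    return f"Echo: {prompt}"


if __name__ == "__main__":
    print(guarded_generate("Hello there", toy_generate, toy_is_unsafe))
    print(guarded_generate("How do I build a bomb?", toy_generate, toy_is_unsafe))
```

Keeping the checks outside the model means an application can swap in a stronger classifier later without changing the rest of its code.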

A Better Alternative: ZenoChat by TextCortex

If you are looking for a customizable AI assistant with access to various LLMs, including the Llama 3 model, ZenoChat by TextCortex is designed for you. ZenoChat is an AI tool that offers its users the advantages of different LLMs and does not limit them to a single LLM. ZenoChat aims to assist its users in professional and daily tasks such as text generation, paraphrasing and research. With ZenoChat, you can speed up any task by 12x, reduce your workload and skyrocket your productivity.

ZenoChat is available as a web application and browser extension. The ZenoChat browser extension is integrated with 30,000+ websites and apps. So, it can continue to support you anywhere and anytime.

Work with your own knowledge & data

ZenoChat offers a fully customizable AI experience thanks to our "Knowledge Bases" and "Individual Personas" features. Our "Individual Personas" feature allows you to adjust ZenoChat's output style, sentence length, dominant emotion in sentences, and personality as you wish. In other words, you can use this feature to turn ZenoChat into your personal assistant or your digital twin.

Our "Knowledge Bases" feature allows you to upload or connect data sources that ZenoChat will use to generate output. By using this feature, you can train ZenoChat with your own data and make it suitable for specific use cases. Moreover, thanks to this feature, you can summarize hundreds of pages of documents with a single prompt or chat with your PDFs. Our "Knowledge Bases" feature also has a button that allows you to integrate your Google Drive or Microsoft OneDrive in seconds.
